A Simple Method for Weakly Supervised Segmentation


Semantic Image Segmentation

Semantic image segmentation—drawing the contours of an object in an image—is a highly active topic of research, and very relevant to medical imaging. Not only does it help to read and understand radiological scans, it can also be used for the diagnosis, surgery planning and follow-up of many diseases, while offering an enormous potential for personalized medicine.

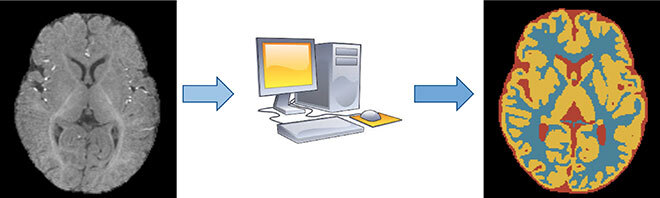

In recent years, deep convolutional neural networks (CNNs) have dominated semantic segmentation, both in computer vision and in medical imaging, achieving groundbreaking performance, albeit at the cost of huge amounts of annotated data. A training dataset is a collection of examples, each containing an image and its corresponding segmentation (i.e., the ground truth), as seen in Figure 1. With an optimization method such as stochastic gradient descent, the network is trained until its output matches the ground truth as closely as possible. Once trained, the network can be used to predict segmentations on new, unseen images.

Labelling Data Is Expensive

In the medical field, only trained medical doctors can confidently annotate images for segmentation, which makes the process very expensive. Furthermore, the complexity and size of the images slow the annotation down: a complex brain scan can take up to several days. More efficient methods are therefore needed.

An attractive line of research consists of using incomplete annotations (weak annotations) instead of full pixel-wise annotations. Drawing a single point inside an object is orders of magnitude faster and simpler than drawing its exact contour. However, those labels cannot be used directly to train a neural network, because they do not give the network enough information to find an optimal, or even satisfactory, solution.

Figure 1: Examples of full and weak annotations. Pixels labelled as background are shown in red, and pixels labelled as foreground (the object to be segmented) in green. The absence of color (in the weak annotations) means that no information is available for those pixels during training.

Our Contribution: Enforcing Size Information with a Penalty

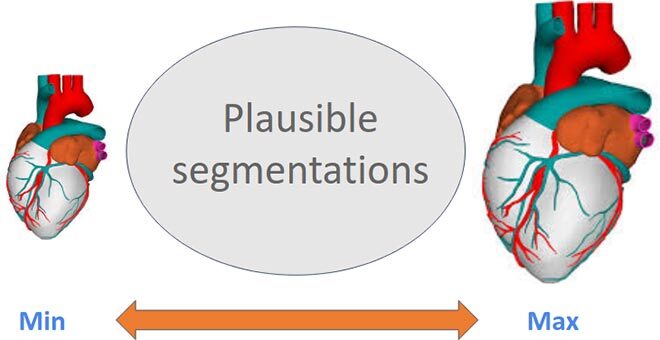

Nevertheless, more information about the problem exists, even though it is not given in the form of direct annotations. For instance, we know the anatomical properties of the organs that we want to segment, such as their approximate size:

Figure 2: By knowing the approximate size, we can guide the network toward anatomically feasible solutions.

We can therefore amend the training procedure, forcing the network not only to correctly predict the few labelled pixels, but also to ensure that the size of the predicted segmentation falls within the range of anatomically plausible sizes known a priori. This is a constrained optimization problem, a well-studied field in general (with Lagrangian methods), but one seldom applied to neural networks: the sheer number of network parameters, the size of the datasets, and the need to alternate between different training modes make such methods impractical at best.
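As an illustration, the constrained problem described above can be written as follows. The notation is assumed here for clarity and does not come from the article: $\theta$ denotes the network parameters, $S_\theta(p)$ the predicted foreground probability at pixel $p$, $\Omega$ the set of image pixels, $\mathcal{H}$ the cross-entropy computed over the few labelled pixels only, and $a$ and $b$ the lower and upper bounds on the anatomically plausible size.

$$
\min_{\theta} \; \mathcal{H}(S_\theta)
\quad \text{subject to} \quad
a \;\le\; \sum_{p \in \Omega} S_\theta(p) \;\le\; b
$$

The sum of foreground probabilities acts as a differentiable stand-in for the number of pixels assigned to the object.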

Our key contribution was to include these constraints in the training process as a direct, naive penalty. The network has to pay a price each time the predicted segmentation size is out of bounds (the bigger the mistake, the bigger the penalty), with no consequence when the bounds are respected.
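The penalty idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the quadratic form of the penalty, the function names, the `weight` parameter, and the use of `None` to mark unlabelled pixels are all hypothetical choices made for this example. A real training setup would express the same computation in a deep learning framework so that gradients can flow through it.

```python
import math
from typing import Optional, Sequence

Probs = Sequence[Sequence[float]]             # foreground probability per pixel
Labels = Sequence[Sequence[Optional[int]]]    # 1 = foreground, 0 = background, None = unlabelled


def soft_size(probs: Probs) -> float:
    """Soft segmentation size: the sum of foreground probabilities."""
    return sum(sum(row) for row in probs)


def size_penalty(size: float, lower: float, upper: float) -> float:
    """Zero inside [lower, upper]; grows with the squared violation outside.

    The quadratic form is an assumption made for this sketch.
    """
    if size < lower:
        return (size - lower) ** 2
    if size > upper:
        return (size - upper) ** 2
    return 0.0


def partial_cross_entropy(probs: Probs, labels: Labels) -> float:
    """Mean cross-entropy over the labelled pixels only."""
    total, count = 0.0, 0
    for prob_row, label_row in zip(probs, labels):
        for p, y in zip(prob_row, label_row):
            if y is None:  # unlabelled pixel: contributes nothing
                continue
            p_true = p if y == 1 else 1.0 - p
            total -= math.log(max(p_true, 1e-12))  # clamp to avoid log(0)
            count += 1
    return total / count if count else 0.0


def total_loss(probs: Probs, labels: Labels,
               lower: float, upper: float, weight: float = 0.01) -> float:
    """Labelled-pixel loss plus the weighted size penalty."""
    penalty = size_penalty(soft_size(probs), lower, upper)
    return partial_cross_entropy(probs, labels) + weight * penalty


if __name__ == "__main__":
    # Toy 2x2 prediction with a single annotated foreground pixel.
    probs = [[0.5, 0.5], [0.75, 0.25]]
    labels = [[None, 1], [None, None]]
    print(total_loss(probs, labels, lower=1.0, upper=3.0))  # size within bounds
    print(total_loss(probs, labels, lower=3.0, upper=4.0))  # size too small
```

When the soft size lies within the bounds, only the labelled-pixel term remains; otherwise the penalty adds a cost that increases with the size of the violation, as described above.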

Results and Conclusion

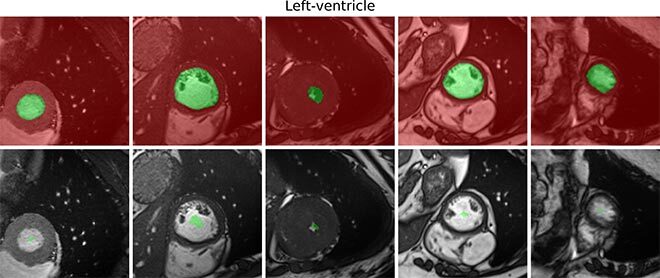

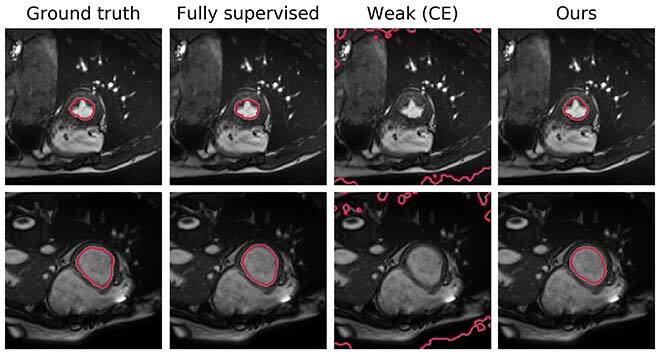

Surprisingly, and contrary to what textbooks on constrained optimization would suggest, our naive penalty proved to be much more efficient, faster, and more stable for deep networks than more complex Lagrangian methods. Taking the size information into account during training, which basically amounts to adding a single value, allowed us to almost close the gap between full and weak supervision in the task of left-ventricle segmentation, while using only 0.01% of annotated pixels.

Figure 3: Visual comparison of the results. In the usual order: label, training with full labels, naive training with weak labels, and our method.

Additional Information

More technical details can be found in the full paper, which describes different settings across several datasets: Kervadec, H.; Dolz, J.; Tang, M.; Granger, E.; Boykov, Y.; Ben Ayed, I. 2019. “Constrained-CNN losses for weakly supervised segmentation.” Medical Image Analysis. Volume 54. pp. 88-99.

The code, available online, is free for reuse and modification: https://github.com/liviaets/sizeloss_wss.