Robots with a Sense of Touch

The featured image was purchased from iStock.com and is protected by copyright.

Editor’s Note

This article is based on a public presentation by Professor Vincent Duchaine of the École de technologie supérieure (ÉTS) in Montréal, as part of CBC’s La Sphère, hosted by Mathieu Dugal on October 1, 2017.

---

Robots are traditionally made of metal and are very strong. Even at their lowest force setting, they cannot grasp delicate objects, such as empty eggshells or Styrofoam cups, without crushing them, because they cannot sense what they are holding. My team of researchers is working to equip these robots with an artificial skin that can measure the pressure exerted at any point of contact. This silicone skin is not made up of discrete sensors; it’s more like a touch screen, except that it’s also flexible and stretchable.

Evaluating a Robot’s Grip with Pressure Imaging

The manufacturing industry requires robots that can pick up objects with different shapes and textures. But how can a robot be taught to evaluate its grip on an object? How can it detect whether it has successfully grasped an object, or whether the object is slipping away?

Figure 1 Objects used for testing

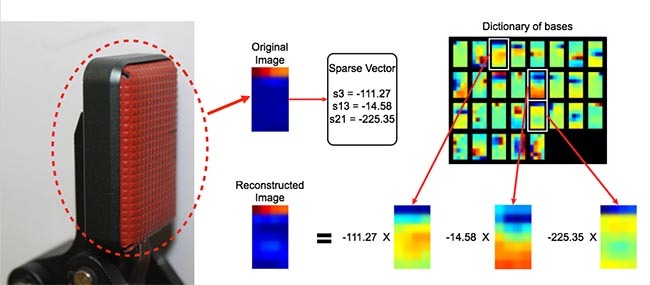

To answer these questions, we ordered around 100 different objects from Amazon. One student had a robot pick up each of these objects about 1,000 times. On each attempt, a pressure image of the robot’s grip was generated (see Figure 2 below), and the student noted whether or not the grip was stable. These images, combined with the student’s observations, formed a dictionary that the robot could quickly consult to judge the stability of its grip. This method is called sparse coding.
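To make the dictionary idea more concrete, here is a minimal sketch of one common way to classify with a sparse code: express a new pressure image as a combination of a few labeled dictionary entries, then pick the label whose entries carry the most weight. Everything in it is an assumption made for illustration and is not taken from the presentation: the 2×2 pressure images flattened to four values, the hand-made dictionary entries, the use of plain matching pursuit as the solver, and the names `matching_pursuit` and `classify_grip`. It should not be read as the team’s actual implementation.

```python
import math

def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical labeled dictionary: flattened 2x2 pressure images.
# "stable" grips spread pressure evenly; "unstable" grips load one edge.
DICTIONARY = [
    ("stable",   [1.0, 1.0, 1.0, 1.0]),
    ("stable",   [0.9, 1.0, 1.0, 0.9]),
    ("unstable", [1.0, 1.0, 0.0, 0.0]),
    ("unstable", [1.0, 0.8, 0.0, 0.1]),
]
LABELS = [label for label, _ in DICTIONARY]
ATOMS = [normalize(image) for _, image in DICTIONARY]  # unit-norm atoms

def matching_pursuit(signal, atoms, n_nonzero=2):
    """Greedy sparse code: at each step, pick the atom most correlated
    with the residual and subtract its contribution."""
    residual = list(signal)
    coeffs = [0.0] * len(atoms)
    for _ in range(n_nonzero):
        scores = [dot(residual, atom) for atom in atoms]
        k = max(range(len(atoms)), key=lambda i: abs(scores[i]))
        coeffs[k] += scores[k]
        residual = [r - scores[k] * a for r, a in zip(residual, atoms[k])]
    return coeffs

def classify_grip(image, atoms=ATOMS, labels=LABELS, n_nonzero=2):
    """Return the label whose atoms carry the most weight in the sparse code."""
    code = matching_pursuit(normalize(image), atoms, n_nonzero)
    weight = {}
    for c, label in zip(code, labels):
        weight[label] = weight.get(label, 0.0) + abs(c)
    return max(weight, key=weight.get)

if __name__ == "__main__":
    print(classify_grip([1.0, 0.9, 1.0, 1.1]))  # evenly spread pressure
    print(classify_grip([0.9, 1.0, 0.1, 0.0]))  # pressure on one edge only
```

In this toy setting, the evenly loaded image is matched mostly by the "stable" entries and the edge-loaded image by the "unstable" ones. A real system would work with far larger pressure images and a dictionary built from many thousands of labeled grasps.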

Figure 2 Sparse coding method

The success rate of this method reached 93%, which is one of the best rates achieved so far. However, there’s still a lot of work to be done before this system can be integrated into factories, where a near-100% success rate is necessary. One reason we fell short of 100% is that the sense of touch alone is not enough to determine whether or not a grip is stable. In fact, human beings also rely on their vision to do this.

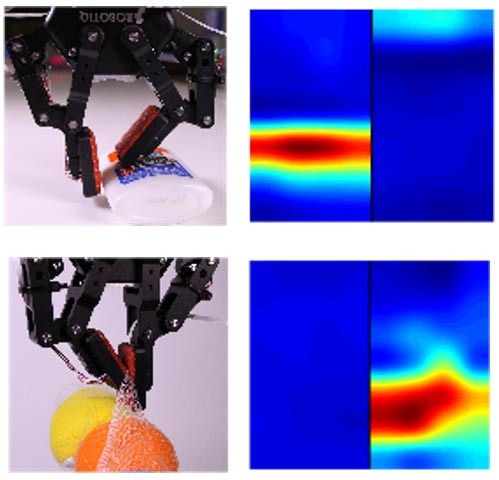

Figure 3 Examples where eyesight is needed to properly assess grip

Determining with Sound if an Object Is Slipping

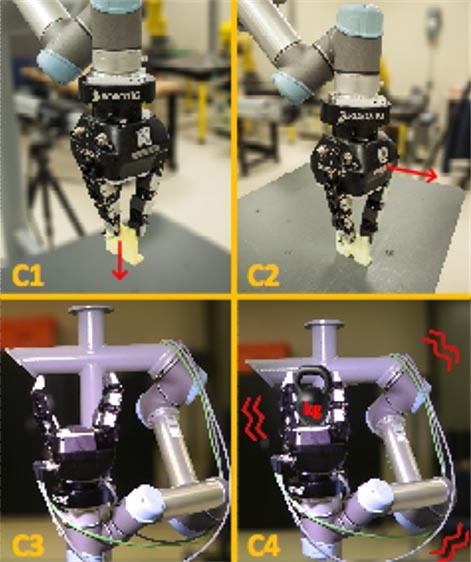

Another question we tried to answer is: how can a robot detect whether the object it is holding is slipping? To find out, we used vibration sensors, that is, devices that can detect sound. The detected vibrations can come from three sources:

- slippage of a gripped object against the robot’s hand (Figure 4, C1 image);

- slippage of a gripped object against another surface (Figure 4, C2 image);

- the robot’s movements (Figure 4, C4 image).

Figure 4 Sources of detectable vibrations

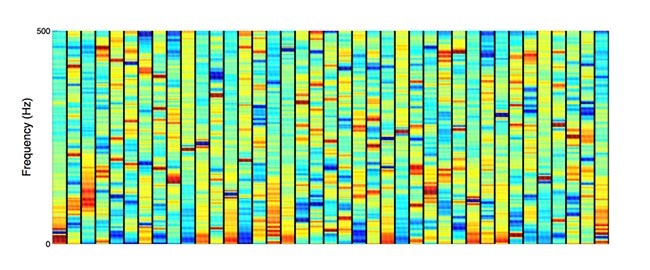

The main challenge here is distinguishing between the different types of vibrations in order to isolate the cases where an object is slipping against the robot’s hand. The sparse coding method was used again, except this time the dictionary contained sounds, in a way that’s similar to how Apple’s Siri system accomplishes voice recognition.
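As a rough illustration of a sound dictionary, the sketch below turns a short vibration recording into a magnitude spectrum and matches it against labeled reference spectra. It uses the simplest possible sparse code, a single dictionary entry chosen by cosine similarity, which is a deliberate simplification. All specifics are assumptions made for this example rather than details from the presentation: the sine-wave stand-ins for recordings, the frequency assigned to each source, the label strings, the energy threshold, and the names `magnitude_spectrum` and `classify_vibration`.

```python
import cmath
import math

def magnitude_spectrum(samples):
    """Magnitudes of the first N/2 DFT bins (naive O(N^2) transform)."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(samples)))
        for k in range(n // 2)
    ]

def unit(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def tone(freq_bin, n=32, amplitude=1.0):
    """Hypothetical stand-in for a recorded vibration: a single sine wave."""
    return [amplitude * math.sin(2 * math.pi * freq_bin * t / n)
            for t in range(n)]

# Hypothetical dictionary: one reference spectrum per vibration source.
# The frequencies are invented; real entries would come from recordings.
VIBRATION_DICTIONARY = [
    ("object slipping in hand", unit(magnitude_spectrum(tone(12)))),
    ("object slipping on surface", unit(magnitude_spectrum(tone(7)))),
    ("robot movement", unit(magnitude_spectrum(tone(2)))),
]

def classify_vibration(samples, dictionary=VIBRATION_DICTIONARY,
                       min_energy=1e-6):
    """Label a recording with its best-matching dictionary entry,
    or report "no vibration" when the signal carries almost no energy."""
    spectrum = magnitude_spectrum(samples)
    if sum(m * m for m in spectrum) < min_energy:
        return "no vibration"
    feature = unit(spectrum)
    label, _ = max(
        dictionary,
        key=lambda entry: sum(a * b for a, b in zip(feature, entry[1])),
    )
    return label

if __name__ == "__main__":
    print(classify_vibration(tone(12, amplitude=0.3)))
    print(classify_vibration([0.0] * 32))
```

Normalizing each spectrum to unit length makes the match depend on the shape of the sound rather than on how loud it is, which is why a faint recording can still be assigned to the right source.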

Figure 5 Vibration Dictionary

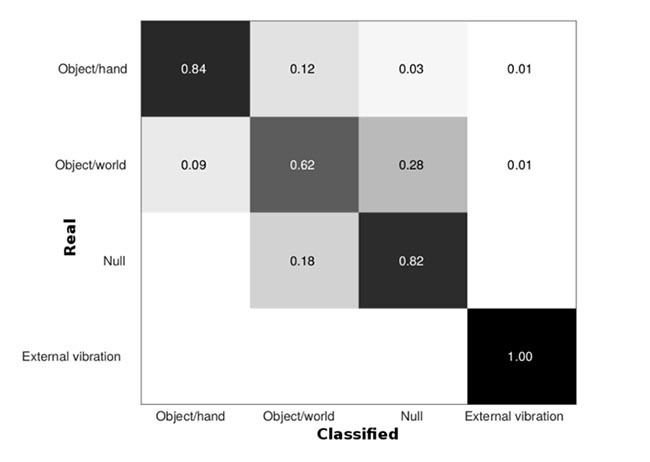

Vibrations caused by the robot’s own movements were all correctly identified, as were cases where no vibration was perceptible. Cases where objects slipped in the robot’s hand were identified at a rate of 84%.

Figure 6 Resulting vibration classification

This result is very encouraging, but again, much work remains to be done before these robots can be deployed in factories.