Blockchain-Based Federated-Split Learning


Federated Learning (FL) allows clients to train models locally in an iterative and collaborative manner. However, some clients, e.g. IoT devices, may not be able to fully participate in training due to limited computing resources. Because these clients might possess valuable training data, their absence affects global model accuracy and training speed. To address these issues, our paper presents Block-FeST, a blockchain-based federated-split learning framework for transformer-based anomaly detection, which combines the strengths of Federated and Split Learning (FSL). FL is used to mitigate data privacy issues, while Split Learning (SL) is used to offload computation from resource-constrained clients to a central server. Moreover, the blockchain generates an audit trail that can be used to resolve challenges raised by clients and to take corrective action.

Introduction

Internet of Things (IoT) devices generate a massive amount of data on a regular basis. This data has the potential to revolutionize every sector by enabling intelligent systems. Unfortunately, the data is often inaccessible due to privacy concerns. As a solution, Google proposed a decentralized machine learning approach, namely federated learning.

FL trains a Deep Learning (DL) model distributed over several clients. In each round of an FL instance, a subset of clients must train a local model and send an update message to a central server. A new global model is calculated and delivered back to all clients by the central server at the end of the round. This process continues until the global model achieves convergence.
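The server-side step of one such round can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes each local model is a flat list of floats and that the server weights each client by its number of training samples, which is the usual formulation of FedAvg (the aggregation rule the protocol description names). The function name `fedavg` and its parameters are hypothetical.

```python
from typing import List, Sequence


def fedavg(client_weights: Sequence[Sequence[float]],
           num_samples: Sequence[int]) -> List[float]:
    """Sample-weighted average of client model weights (FedAvg-style sketch).

    Assumption: every client sends a flat weight vector of the same length,
    together with the number of local training samples it used.
    """
    if not client_weights or len(client_weights) != len(num_samples):
        raise ValueError("need one sample count per client update")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, num_samples):
        if len(weights) != dim:
            raise ValueError("all client updates must have the same length")
        share = n / total  # this client's contribution to the average
        for i, value in enumerate(weights):
            global_weights[i] += share * value
    return global_weights


# Two clients; the second holds three times as much data as the first.
new_global = fedavg([[1.0, 2.0], [3.0, 4.0]], num_samples=[1, 3])
```

In a real deployment the weight vectors would be per-layer tensors, and only the subset of clients selected for the round would contribute.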

However, clients with limited computing resources may fail to submit their local models on time. This affects global model accuracy and training speed, since these clients might possess valuable training data. Furthermore, in a traditional FL system, the server can spam global models by endorsing its peers.

A Proposed Block-FeST Protocol

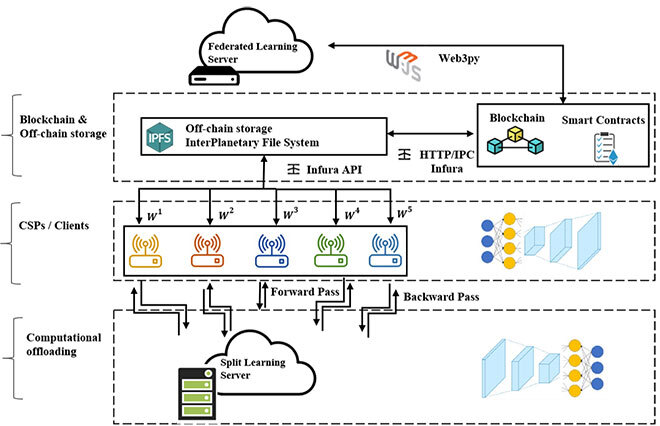

Block-FeST consists of four phases: initialization, split learning, aggregation, and updating. First, the FL server initiates the FL process, starting the first FL round by sending the initial global model to all clients, as shown in Figure 1. Each client then begins training its local model on its own data. Clients share their computational load with the split learning (SL) server: half of each local model is trained on the SL server. Once the clients' local model training is complete, the SL server sends the result back to the clients. The clients' local models are stored off-chain, in the InterPlanetary File System (IPFS), while the hash of each local model is stored on the blockchain to prevent tampering. The local models are then aggregated by the FL server, which retrieves them from IPFS at the end of each round. In the updating phase, the FL server uses the FedAvg algorithm to aggregate all local models into a global model. Every FL round ends with the blockchain sending the updated global model to the clients to begin the next FL round. This process continues until specific convergence criteria are met. The blockchain-based FL server ensures transparency and immutability by making local and global models publicly verifiable.
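To make the split learning phase concrete, the sketch below trains a toy two-parameter model whose first half lives on the client and whose second half lives on the SL server. It is an illustration under several assumptions that the text does not state: the model is `w2 * relu(w1 * x)` rather than a transformer, the label is shared with the server, and the classes `SplitClient` and `SplitServer` are hypothetical names.

```python
from typing import List, Tuple


class SplitServer:
    """Hypothetical SL server holding the second half of the model (w2)."""

    def __init__(self, w2: float, lr: float) -> None:
        self.w2 = w2
        self.lr = lr

    def train_step(self, activation: float, label: float) -> Tuple[float, float]:
        """Finish the forward pass, update w2, return loss and cut-layer gradient."""
        pred = self.w2 * activation
        err = pred - label
        loss = err ** 2
        grad_w2 = 2.0 * err * activation
        grad_activation = 2.0 * err * self.w2  # uses w2 from before the update
        self.w2 -= self.lr * grad_w2
        return loss, grad_activation


class SplitClient:
    """Hypothetical client holding the first half of the model (w1)."""

    def __init__(self, w1: float, lr: float) -> None:
        self.w1 = w1
        self.lr = lr
        self._x = 0.0
        self._pre = 0.0

    def forward(self, x: float) -> float:
        """Compute the cut-layer activation that is sent to the server."""
        self._x = x
        self._pre = self.w1 * x
        return max(0.0, self._pre)  # ReLU

    def backward(self, grad_activation: float) -> None:
        """Continue backpropagation locally with the gradient from the server."""
        relu_grad = 1.0 if self._pre > 0.0 else 0.0
        self.w1 -= self.lr * grad_activation * relu_grad * self._x


def train(steps: int = 50) -> List[float]:
    client = SplitClient(w1=0.5, lr=0.1)
    server = SplitServer(w2=0.5, lr=0.1)
    losses = []
    for _ in range(steps):
        activation = client.forward(1.0)                   # client -> server
        loss, grad = server.train_step(activation, 2.0)    # server-side half
        client.backward(grad)                              # server -> client
        losses.append(loss)
    return losses


losses = train()
```

Only the cut-layer activation and its gradient cross the network in this sketch; the raw input `x` stays on the client.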

Figure 1: Proposed Architecture
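The off-chain storage and on-chain hash described above can be illustrated with a small in-memory sketch. It does not use a real IPFS or blockchain client: the dictionary `off_chain_store` stands in for IPFS, the list `ledger` stands in for the blockchain, and SHA-256 over a JSON serialization is an assumed digest scheme. All function and variable names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

# Stand-ins for the real components (assumptions, not actual IPFS/blockchain APIs).
off_chain_store: Dict[str, bytes] = {}   # content-addressed storage, like IPFS in spirit
ledger: List[dict] = []                  # append-only list of on-chain records


def submit_local_model(round_id: int, client_id: str, weights: List[float]) -> dict:
    """Store the model off-chain and record only its hash on the ledger."""
    payload = json.dumps(weights).encode("utf-8")
    digest = hashlib.sha256(payload).hexdigest()
    off_chain_store[digest] = payload
    record = {"round": round_id, "client": client_id, "digest": digest}
    ledger.append(record)
    return record


def fetch_verified(record: dict) -> List[float]:
    """Retrieve a model and check it against the hash recorded on the ledger."""
    payload = off_chain_store[record["digest"]]
    if hashlib.sha256(payload).hexdigest() != record["digest"]:
        raise ValueError("local model does not match its on-chain hash")
    return json.loads(payload)


record = submit_local_model(round_id=1, client_id="client-a", weights=[0.1, 0.2])
restored = fetch_verified(record)  # succeeds while the stored bytes are untouched
```

Because the ledger holds only the digest, any later change to the stored bytes is detected when the FL server fetches the model for aggregation.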

Validating the Proposed Approach

As a case study, we considered a Communication Service Provider (CSP) infrastructure in which different CSPs use their local data to train a federated model. These CSPs monitor Key Performance Indicators (KPIs) such as cell downtime, latency, and throughput to detect anomalies, which are often caused by sudden traffic spikes or equipment failures.

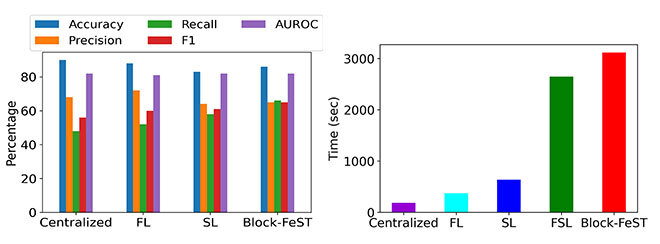

We evaluated Block-FeST against known centralized and decentralized baselines, namely FL, SL, and FSL. The centralized solution achieved an accuracy of up to 0.90 by the 20th epoch, as illustrated in Figure 2. Our proposed setting, Block-FeST, achieved an accuracy of up to 0.86, with a precision of 0.65, a recall of 0.66, and an F1-score of up to 0.65. Figure 3 shows that Block-FeST incurred a delay of up to 15% compared to the centralized approach.
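As a quick consistency check on the reported metrics, the F1-score is the harmonic mean of precision and recall. The snippet below applies that standard formula to the reported values, assuming the precision and recall figures come from the same evaluation run (the text does not say so explicitly).

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Reported values for Block-FeST: precision 0.65, recall 0.66.
f1 = f1_score(0.65, 0.66)
f1_rounded = round(f1, 2)
```

Rounded to two decimals, the result agrees with the reported F1-score of 0.65.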

Figure 2: Baseline Comparison

Figure 3: Execution Time

Conclusion

Block-FeST enables resource-constrained clients to participate in IoT-based real-time anomaly detection systems. Our solution ensures a more robust anomaly detection (AD) model for critical KPIs, such as latency, throughput, and cell downtime, allowing Ericsson to respond more proactively to cell outages. Moreover, the blockchain orchestrates the training process while maintaining transparency and traceability.