Artificial Intelligence Assisting Orthopaedic Imaging

Provided by the authors. CC Licence

Correctly identifying bone structures on X-rays is an important step in some orthopaedic procedures. This seemingly simple task is performed mainly by radiologists before more complex procedures are carried out. Automatic identification of bones in X-rays is difficult, especially when the field of view and patient orientation are not known in advance and when structures overlap. The aim of this work is to automatically identify lower limb bones in X-rays with different fields of view and two patient orientations. The proposed method uses data augmentation to improve the training of a deep learning network (SegNet), making it possible to identify lower limb bones. On a validation database of 60 X-rays, we obtained a Dice coefficient of 93.85 +/- 0.02%, demonstrating the relevance of the proposed method.

Keywords: Bone structure identification, semantic segmentation, X-ray

A More Accurate Method of Bone Identification

Many orthopaedic procedures involving the lower limb bones require X-ray imaging of the patient in a standing position to correctly estimate the clinical parameters (e.g. neck-shaft angle, femoral-tibial angle) that inform decision-making. These clinical parameters are extracted by identifying the bones in the images [1]. Automated methods for this task have been described in the literature [2] [3], but most were tested on X-rays with a single field of view and patient orientation, were dedicated to identifying a single structure [2], or were limited to relatively uncomplicated images (e.g. antero-posterior views) [2].

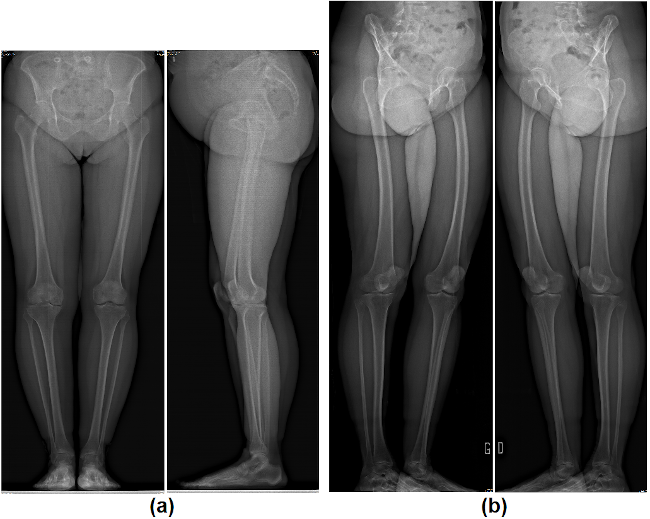

In this article, we propose a method for identifying bone structures in X-rays with different fields of view (lower limb images, full-body images and images in which the target bone is only partially visible) and two patient orientations (0°/90° and 45°/45°), without any human intervention. The method was applied to X-rays acquired with the EOS system [4] and enabled precise and robust extraction of four lower limb bones (the femurs and tibias) despite significant challenges posed by overlapping bone and muscle structures.

Figure 1 (a) 0°/90° orientation X-rays (b) 45°/45° orientation X-rays

Proposed Data Augmentation Method

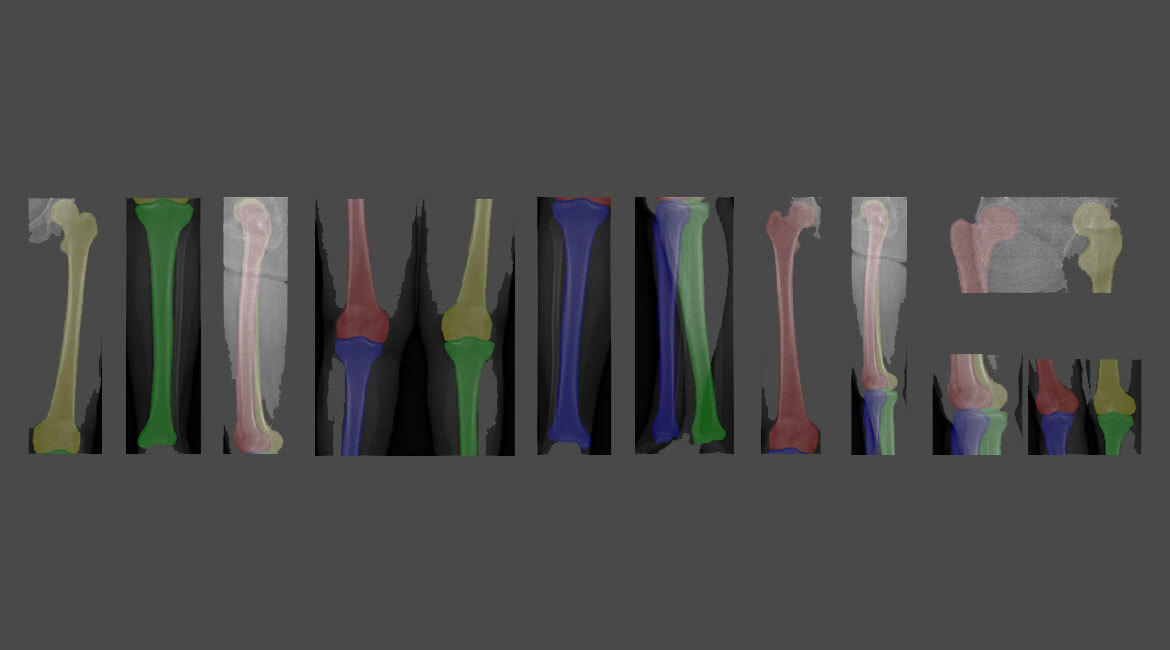

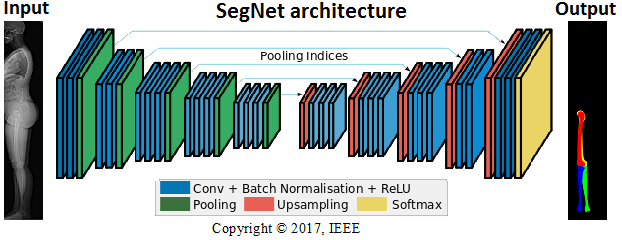

To automatically identify the four bones, we first generated ground truth masks by delineating the contours of each bone structure on the corresponding X-rays (180 in total). From these contours, we created a mask of a different colour for each structure. To improve the training of the neural network (SegNet [5]), we used a data augmentation strategy that yielded a training database of 43,960 images. The strategy was based on three transformations: scaling (uniform and non-uniform), cropping, and sliding windows.

During training, the network received each image together with its mask and learned the characteristics of the target structures so that it could estimate the mask. During testing, the network received an image alone and generated an output mask for the target bone structure, based on the characteristics learned during training.
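The article does not give the parameters of its augmentation, so the following Python sketch only illustrates the three transformation types (scaling, cropping, sliding windows) applied jointly to one image and its label mask with NumPy. The scale factors, window size, stride and the helper names (`resize_nearest`, `scale`, `crop`, `sliding_windows`, `augment`) are hypothetical choices for illustration, not the authors' settings. Nearest-neighbour resampling is assumed so that the mask keeps only its original label values.

```python
import numpy as np


def resize_nearest(arr, new_h, new_w):
    """Nearest-neighbour resize of the first two axes.

    Nearest-neighbour sampling never creates new values, so a label mask
    keeps exactly its original labels after resizing.
    """
    h, w = arr.shape[:2]
    rows = np.arange(new_h) * h // new_h
    cols = np.arange(new_w) * w // new_w
    return arr[rows[:, None], cols[None, :]]


def scale(image, mask, sy, sx):
    """Scale image and mask together: uniform if sy == sx, non-uniform otherwise."""
    h, w = image.shape[:2]
    new_h, new_w = max(1, round(h * sy)), max(1, round(w * sx))
    return resize_nearest(image, new_h, new_w), resize_nearest(mask, new_h, new_w)


def crop(image, mask, top, left, height, width):
    """Cut the same rectangular region out of the image and its mask."""
    return (image[top:top + height, left:left + width],
            mask[top:top + height, left:left + width])


def sliding_windows(image, mask, win_h, win_w, stride):
    """Yield every full-size window visited by a window moving with the given stride."""
    h, w = image.shape[:2]
    for top in range(0, h - win_h + 1, stride):
        for left in range(0, w - win_w + 1, stride):
            yield crop(image, mask, top, left, win_h, win_w)


# Hypothetical parameters: the (sy, sx) pairs, window size and stride are illustrative only.
def augment(image, mask, scales=((1.0, 1.0), (0.8, 0.8), (1.0, 0.8)),
            win=(64, 64), stride=32):
    """Scale the pair, then split each scaled version into sliding-window crops."""
    pairs = []
    for sy, sx in scales:
        img_s, msk_s = scale(image, mask, sy, sx)
        pairs.extend(sliding_windows(img_s, msk_s, win[0], win[1], stride))
    return pairs


if __name__ == "__main__":
    image = np.zeros((128, 128), dtype=np.uint8)
    mask = np.zeros((128, 128), dtype=np.uint8)
    mask[20:100, 30:60] = 1   # e.g. one bone
    mask[20:100, 70:100] = 2  # e.g. another bone
    pairs = augment(image, mask)
    print(len(pairs))  # 9 + 4 + 6 = 19 windows of 64x64
```

Each (image, mask) pair is transformed with identical geometry, which is what keeps the ground truth aligned with the pixels it labels; multiplying a few scale settings by many window positions is how a small set of annotated X-rays can grow into a much larger training set.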

Figure 2 Architecture of the SegNet method
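SegNet [5] is an encoder-decoder network whose decoder upsamples feature maps using the max-pooling indices recorded by the corresponding encoder layer, rather than learning the upsampling. The NumPy sketch below illustrates only that pooling/unpooling mechanism on a single 2D feature map with 2x2 windows; it is not a full SegNet, and the function names are hypothetical. In practice a deep learning framework would be used (for example, PyTorch's `MaxPool2d(return_indices=True)` together with `MaxUnpool2d`).

```python
import numpy as np


def max_pool_with_indices(x):
    """2x2, stride-2 max pooling of a 2D array (H and W assumed even).

    Returns the pooled values and, for each of them, the flat index in x
    where the maximum was found (the "pooling indices" SegNet stores).
    """
    h, w = x.shape
    # Group pixels into non-overlapping 2x2 blocks: shape (h/2, w/2, 4).
    blocks = (x.reshape(h // 2, 2, w // 2, 2)
               .transpose(0, 2, 1, 3)
               .reshape(h // 2, w // 2, 4))
    arg = blocks.argmax(axis=2)  # position of the max inside its block (0..3)
    pooled = blocks.max(axis=2)
    # Convert the in-block position to row/column coordinates in x.
    bi, bj = np.indices((h // 2, w // 2))
    rows = 2 * bi + arg // 2
    cols = 2 * bj + arg % 2
    return pooled, rows * w + cols


def max_unpool(pooled, indices, out_shape):
    """Put each value back at its recorded position; every other pixel is 0."""
    out = np.zeros(out_shape, dtype=pooled.dtype)
    out.flat[indices.ravel()] = pooled.ravel()
    return out


if __name__ == "__main__":
    x = np.array([[1, 2, 0, 0],
                  [3, 4, 0, 5],
                  [0, 0, 7, 1],
                  [6, 0, 0, 2]])
    pooled, idx = max_pool_with_indices(x)
    print(pooled.tolist())                  # [[4, 5], [6, 7]]
    print(max_unpool(pooled, idx, x.shape))
```

Because the unpooled map is sparse (each maximum returns to its exact original location), SegNet follows each unpooling step with trainable convolutions that densify it; reusing the indices helps preserve boundary positions, which matters when delineating bone contours.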

Results of the SegNet Method

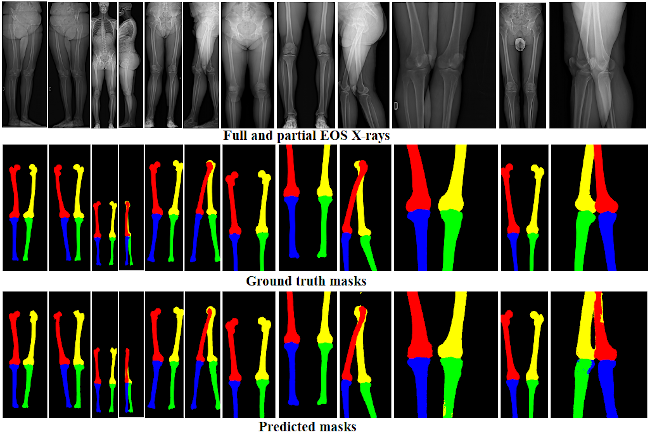

For evaluation, we used a database of 60 X-rays acquired with the EOS system. The Dice coefficient was used as the evaluation metric to measure the similarity between the ground truth and predicted masks. We obtained a Dice coefficient of 93.85 +/- 0.02%. The figure above shows some of the qualitative results.
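For a ground truth mask A and a predicted mask B, the Dice coefficient is 2|A ∩ B| / (|A| + |B|), which ranges from 0 (no overlap) to 1 (identical masks). The Python sketch below computes it per label with NumPy and summarizes the scores as a mean and standard deviation. The article does not state how scores were aggregated (per image, per bone, or pooled), so the per-image, per-label averaging in the hypothetical `dice_summary` helper, and the integer label values, are assumptions.

```python
import numpy as np


def dice(gt, pred, label=1):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for one label of two label masks."""
    a = gt == label
    b = pred == label
    denom = int(a.sum()) + int(b.sum())
    if denom == 0:
        return 1.0  # label absent from both masks: counted as perfect agreement (a convention)
    return 2.0 * int(np.logical_and(a, b).sum()) / denom


def dice_summary(gts, preds, labels):
    """Mean and standard deviation of Dice over every (image, label) pair."""
    scores = [dice(g, p, lab) for g, p in zip(gts, preds) for lab in labels]
    return float(np.mean(scores)), float(np.std(scores))


if __name__ == "__main__":
    gt = np.zeros((4, 4), dtype=np.uint8)
    gt[0:2, 0:2] = 1    # 4 pixels
    pred = np.zeros((4, 4), dtype=np.uint8)
    pred[0:2, 0:3] = 1  # 6 pixels, 4 of them overlapping gt
    print(dice(gt, pred))  # 2 * 4 / (4 + 6) = 0.8
```

With one label per bone (for example, hypothetical labels 1 to 4), `dice_summary(gts, preds, [1, 2, 3, 4])` returns a mean and spread over the validation set, which can be multiplied by 100 to express them as percentages.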

Figure 3 Comparisons between ground truth masks and predicted masks using the SegNet method

Conclusion

In this work, four lower limb bones were automatically identified on X-rays with varying fields of view and different patient orientations. By automating this identification step, the method could allow clinicians to devote more time to other aspects of the personalized treatment of patients.

Additional information

For more information on this research, please refer to the following conference paper:

Olory Agomma, R., Vázquez, C., Cresson, T. and de Guise, J., 2019. “Detection and Identification of Lower-Limb Bones in biplanar X-Ray Images with Arbitrary Field of View and Various Patient Orientations.” In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019).