In Search of Light Paths – Computer-Generated Images

Purchased on Istockphoto.com. Copyright.

Computer-generated imagery has undergone a revolution over the last twenty years. Once produced with empirical methods, it is now created with robust tools that draw on several scientific fields, such as computer science, mathematics, physics, and the study of human visual perception.

Originally used in video games and movies, CGI now has applications in many other fields. It is used to produce furniture catalogues as well as to train professionals for complex tasks in simulators (e.g. surgical simulators). Coupled with artificial intelligence algorithms, computer graphics have become essential for training factory robots and self-driving cars.

Many aspects must be considered to create a photorealistic image, that is, an image that cannot be told apart from a real one. Central among them are light-matter interactions, which are the core of our research. Our goal is to develop efficient, robust and automatic algorithms that reproduce the behaviour of light in any 3D environment as accurately as possible.

Complex Interactions

Light is electromagnetic radiation, characterized by its spectrum, that is, how its energy is distributed over different wavelengths. When a light ray strikes an object, it can be partially reflected, refracted, scattered or absorbed. To achieve photorealism, we must therefore develop lighting models that render these effects as accurately as possible.
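For a smooth surface between two media, the reflected and refracted directions follow simple geometric laws. The minimal sketch below computes the mirror-reflected direction, the refracted direction from Snell's law, and, as an assumption of convenience, Schlick's approximation of the Fresnel reflectance, which gives the fraction of light reflected rather than refracted. The function names are our own, and scattering and absorption inside the object are not modelled.

```python
from __future__ import annotations

import math

Vec = tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror-reflect the incoming direction d about the unit normal n."""
    return add(d, scale(n, -2.0 * dot(d, n)))

def refract(d: Vec, n: Vec, eta: float) -> Vec | None:
    """Refract the unit direction d through a surface with unit normal n facing
    against d; eta = n_incident / n_transmitted. Returns None on total
    internal reflection."""
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)   # Snell's law, squared
    if sin2_t > 1.0:
        return None                               # no transmitted ray
    cos_t = math.sqrt(1.0 - sin2_t)
    return add(scale(d, eta), scale(n, eta * cos_i - cos_t))

def schlick(cos_i: float, n1: float, n2: float) -> float:
    """Schlick's approximation of the fraction of light that is reflected."""
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    return r0 + (1.0 - r0) * (1.0 - cos_i) ** 5
```

For example, light arriving head-on at glass (refractive index about 1.5) continues straight through, and `schlick(1.0, 1.0, 1.5)` reports that about 4% of it is reflected.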

In practice, we work on a digital version of a virtual world, in which each object is given a shape and a location. Colours and textures are also assigned to these objects to model the spatial variation of their appearance. Finally, light sources are placed in the scene, and the viewpoint of the shot is set by a virtual camera.

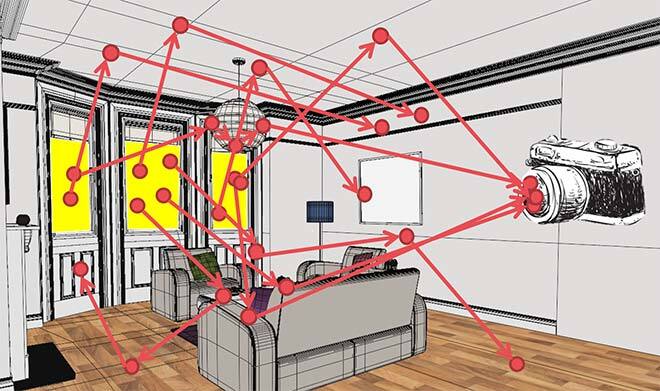

Rendering algorithms compute how light propagates in this environment, either directly from a source to the camera or after bouncing off different objects. The routes that light follows from a source to the camera, through any number of bounces, are called light paths.
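As a minimal sketch (our own illustration, not the authors' renderer), the shortest bouncing light path can be built explicitly: a ray leaves the camera, hits a diffuse sphere, and the hit point is connected to a point light. The scene (one grey Lambertian sphere and one point light) and every name below are hypothetical. No shadow test is needed because a single convex sphere cannot block its own lit side; longer paths repeat the bounce step at each vertex.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

Vec = tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: Vec
    radius: float
    albedo: float  # grey diffuse reflectance in [0, 1]

def intersect(origin: Vec, direction: Vec, sphere: Sphere) -> float | None:
    """Distance along a unit-direction ray to the nearest hit in front of origin."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def trace_direct_path(camera: Vec, direction: Vec, sphere: Sphere,
                      light_pos: Vec, light_intensity: float) -> tuple[list[Vec], float]:
    """Build the path camera -> sphere -> light; return its vertices and radiance."""
    t = intersect(camera, direction, sphere)
    if t is None:
        return [camera], 0.0               # the ray escapes: no contribution
    hit = add(camera, scale(direction, t))
    normal = normalize(sub(hit, sphere.center))
    to_light = sub(light_pos, hit)
    dist2 = dot(to_light, to_light)
    cos_theta = max(0.0, dot(normal, normalize(to_light)))
    # Lambertian BRDF (albedo / pi) times the inverse-square falloff of a point light
    radiance = sphere.albedo / math.pi * light_intensity * cos_theta / dist2
    return [camera, hit, light_pos], radiance
```

For a camera at the origin looking down the negative z axis at a sphere of radius 1 centred 5 units away, with a light of intensity 10 placed 2 units in front of the sphere, the path has three vertices and a radiance of 2/π.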

Illustration of light paths in a 3D scene

Stochastic algorithms generate paths randomly to estimate light transport in a 3D environment. If the algorithm simulates a very large number of light paths, the resulting image will be almost perfect. However, simulating so many paths requires enormous computational power, so in practice we must focus on the paths that contribute most to the chosen camera shot. The Monte Carlo method makes this possible: it estimates light transport by averaging randomly sampled paths, and sampling important paths more often reduces noise without biasing the result, provided each path is weighted by the inverse of its sampling probability.
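The effect of focusing on important paths can be seen on a one-dimensional toy, a sketch under our own assumptions rather than a renderer: the hypothetical "contribution" f(x) = 3x² on [0, 1] stands in for path contributions, and its exact integral is 1. Uniform sampling is compared with importance sampling from the density p(x) = 2x, which favours the region where f is large; dividing each sample by p(x) keeps the estimate unbiased.

```python
import math
import random
import statistics

def f(x: float) -> float:
    """Toy contribution on [0, 1], larger near x = 1; its exact integral is 1."""
    return 3.0 * x * x

def estimate_uniform(n: int, rng: random.Random) -> float:
    # x ~ U(0, 1): pdf p(x) = 1, so each sample contributes f(x) / p(x) = f(x)
    return sum(f(rng.random()) for _ in range(n)) / n

def estimate_importance(n: int, rng: random.Random) -> float:
    # x ~ p(x) = 2x by inverting its CDF x^2; 1 - random() lies in (0, 1], so p(x) > 0
    total = 0.0
    for _ in range(n):
        x = math.sqrt(1.0 - rng.random())
        total += f(x) / (2.0 * x)   # weight by 1 / p(x) to stay unbiased
    return total / n

def compare(n: int = 100, runs: int = 200, seed: int = 1) -> dict[str, tuple[float, float]]:
    """Mean and variance of each estimator over independent runs."""
    rng = random.Random(seed)
    results = {}
    for name, estimator in (("uniform", estimate_uniform), ("importance", estimate_importance)):
        estimates = [estimator(n, rng) for _ in range(runs)]
        results[name] = (statistics.fmean(estimates), statistics.variance(estimates))
    return results
```

Both estimators average to about 1, but the importance-sampled one has several times less variance. Sampling exactly in proportion to f (p(x) = 3x²) would remove the noise entirely; real renderers can only approximate this, which is why the choice of sampling technique matters.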

Monte Carlo Approach

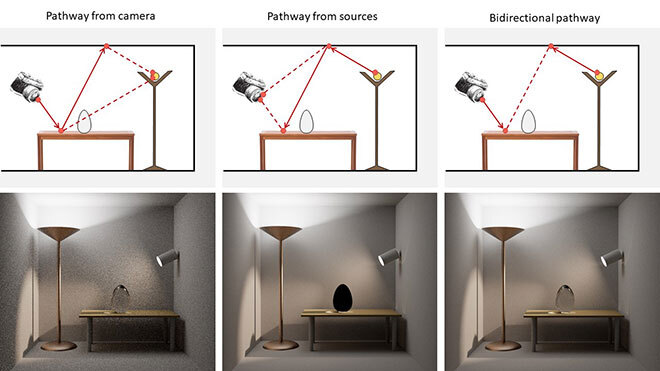

There are many ways to construct a light path. For example, it can be generated from the virtual camera, from a light source or even from both ends at once (bidirectionally). The way light paths are constructed directly affects how efficiently light transport is estimated. The following figure shows the images obtained with different sampling techniques.

Impact of sampling techniques on scene rendering

In this example, the paths traced from the camera produce a very noisy image, because it is difficult for them to reach the main light source. The paths traced from the light source fail to render the object on the desk (black shape), because its material reflects light in only a few specific directions, so paths that bounce off it almost never reach the camera. By combining the two approaches and allowing paths to be connected arbitrarily, bidirectional path tracing produces the best rendering for the same computation time.
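The article does not say how the bidirectional method weights the different ways of building the same path. A standard choice in the literature is multiple importance sampling with Veach's balance heuristic, sketched below on a hypothetical 1D toy rather than on real light paths. Technique A favours one end of the domain and technique B the other, loosely mirroring how camera paths and light paths each succeed on different parts of the scene. B alone is unbiased but has unbounded variance where its density vanishes; the weighted combination stays well behaved. All names are our own.

```python
import random
import statistics

def f(x: float) -> float:
    """Toy integrand with features at both ends of [0, 1]; its exact integral is 2."""
    return 4.0 * x ** 3 + 4.0 * (1.0 - x) ** 3

def pdf_a(x: float) -> float:
    return 3.0 * (1.0 - x) ** 2   # technique A: favours x near 0

def pdf_b(x: float) -> float:
    return 3.0 * x ** 2           # technique B: favours x near 1

def sample_a(rng: random.Random) -> float:
    # inverse CDF of pdf_a with v = random(): x = 1 - (1 - v)^(1/3)
    return 1.0 - (1.0 - rng.random()) ** (1.0 / 3.0)

def sample_b(rng: random.Random) -> float:
    # inverse CDF of pdf_b: x = v^(1/3), with v = 1 - random() in (0, 1] so pdf_b(x) > 0
    return (1.0 - rng.random()) ** (1.0 / 3.0)

def estimate_b_only(rng: random.Random) -> float:
    """One sample from technique B alone: unbiased, but very noisy near x = 0."""
    x = sample_b(rng)
    return f(x) / pdf_b(x)

def estimate_mis(rng: random.Random) -> float:
    """One sample from each technique, combined with the balance heuristic."""
    total = 0.0
    for sample, pdf in ((sample_a, pdf_a), (sample_b, pdf_b)):
        x = sample(rng)
        weight = pdf(x) / (pdf_a(x) + pdf_b(x))   # weights of A and B sum to 1 at every x
        total += weight * f(x) / pdf(x)
    return total

def compare(runs: int = 2000, seed: int = 3) -> dict[str, tuple[float, float]]:
    """Mean and variance of each estimator over independent runs."""
    rng = random.Random(seed)
    results = {}
    for name, estimator in (("b_only", estimate_b_only), ("mis", estimate_mis)):
        values = [estimator(rng) for _ in range(runs)]
        results[name] = (statistics.fmean(values), statistics.variance(values))
    return results
```

Because each sample is weighted by its technique's share of the summed densities, each technique dominates where it samples well, much as camera and light subpaths complement each other in the figure.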

Metropolis-Hastings Approach

Some scenes are harder to render because they require many light bounces, or contain geometric or optical constraints that restrict the passage of light paths. These scenes, in which contributing paths are rare, are not sampled efficiently with simple uniform sampling. In other words, searching for paths in such scenes is like looking for a needle in a haystack.

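This problem can be overcome with the Metropolis-Hastings approach, which explores the neighbourhood of contributing paths locally. To do so, a slight mutation is applied to a previously found contributing path to propose a new one. The proposal is accepted with a probability equal to the ratio of its contribution to that of the current path (capped at one, and corrected for the mutation probabilities when the mutation is not symmetric); otherwise, the current path is kept. The resulting sequence of paths forms a Markov chain, whose states are then used in a Monte Carlo estimator.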
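A minimal sketch of this idea, under our own assumptions: a hypothetical one-dimensional "path space" [0, 1] whose only contributing paths lie in a narrow bump (the needle), a symmetric Gaussian mutation, and two "pixels" split at the bump's centre. Uniform sampling would land within three bump-widths of the needle only about 0.6% of the time, whereas the chain, once started on a contributing path, stays in the contributing region. For a symmetric mutation, the acceptance probability reduces to min(1, f(new) / f(current)). All names and constants are illustrative.

```python
import math
import random

CENTER, WIDTH = 0.7, 0.001   # hypothetical location and width of the "needle"

def f(x: float) -> float:
    """Toy path contribution: zero outside [0, 1], a narrow bump inside."""
    if not 0.0 <= x <= 1.0:
        return 0.0
    return math.exp(-0.5 * ((x - CENTER) / WIDTH) ** 2)

def metropolis_chain(x0: float, steps: int, step_size: float, seed: int = 0) -> list[float]:
    """Metropolis-Hastings with a symmetric Gaussian mutation; in the long run the
    states are distributed in proportion to f."""
    if f(x0) <= 0.0:
        raise ValueError("the chain must start from a contributing path")
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    states = []
    for _ in range(steps):
        y = x + rng.gauss(0.0, step_size)   # slight mutation of the current path
        fy = f(y)
        # symmetric mutation: accept with probability min(1, f(y) / f(x))
        if rng.random() < fy / fx:
            x, fx = y, fy
        states.append(x)                    # a rejected proposal repeats the current state
    return states

def pixel_shares(states: list[float], edges: list[float]) -> list[float]:
    """Fraction of chain states falling in each 'pixel' [edges[i], edges[i+1])."""
    counts = [0] * (len(edges) - 1)
    for x in states:
        for i in range(len(counts)):
            if edges[i] <= x < edges[i + 1]:
                counts[i] += 1
                break
    return [c / len(states) for c in counts]
```

Running `pixel_shares(metropolis_chain(CENTER, 50_000, step_size=0.002), [0.0, CENTER, 1.0])` gives roughly half of the states to each pixel, matching the symmetric bump. In Metropolis light transport, pixel values are proportional to such shares; the overall brightness comes from a separate estimate of the total contribution, which this sketch omits.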

Improvement of rendering using the Metropolis-Hastings approach

Different Types of Image Rendering

In addition to forward rendering (creating an image from a description of the scene, the objects within it and the desired lighting), the reverse process can also be performed: finding, from an image, the parameters that produced it.

This process is called inverse rendering and has many applications in machine learning and computer vision. One example is capturing the appearance and geometry of real objects to incorporate them into a virtual world. These applications rely on iterative optimization driven by gradient estimates with respect to the scene parameters: at each iteration, the parameters are adjusted so that the rendered image moves closer to the target image, until the two match.
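The optimization loop can be sketched on a deliberately tiny problem. The assumptions are ours: the "renderer" is a closed-form Lambertian shading of five pixels with known surface orientations and a known light, and the only unknown scene parameter is the albedo. The gradient of the squared image error is derived by hand and used to update the parameter, not the image. Real differentiable renderers estimate such gradients stochastically through full light transport and must handle visibility discontinuities, which this toy ignores.

```python
import math

LIGHT_INTENSITY = 3.0                   # hypothetical, assumed known
COS_TERMS = [1.0, 0.8, 0.5, 0.2, 0.0]   # hypothetical per-pixel cosines (normal vs light)

def render(albedo: float) -> list[float]:
    """Forward model: Lambertian shading, pixel = albedo / pi * I * cos."""
    return [albedo / math.pi * LIGHT_INTENSITY * c for c in COS_TERMS]

def loss_and_grad(albedo: float, target: list[float]) -> tuple[float, float]:
    """Squared image error and its derivative with respect to the albedo."""
    image = render(albedo)
    loss = sum((p - t) ** 2 for p, t in zip(image, target))
    # d pixel / d albedo = I * cos / pi, hence dL/d albedo = sum 2 (p - t) I cos / pi
    grad = sum(2.0 * (p - t) * LIGHT_INTENSITY * c / math.pi
               for p, t, c in zip(image, target, COS_TERMS))
    return loss, grad

def fit_albedo(target: list[float], albedo: float = 0.1,
               lr: float = 0.2, iters: int = 100) -> float:
    """Gradient descent on the scene parameter until the rendering matches the target."""
    for _ in range(iters):
        _, grad = loss_and_grad(albedo, target)
        albedo -= lr * grad
    return albedo
```

Starting from an albedo of 0.1, `fit_albedo(render(0.6))` converges to approximately 0.6, the value that produced the target image.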

There is a long history of using gradients in image synthesis. For example, gradient-domain rendering methods directly estimate gradients in the image plane, that is, differences between neighbouring pixels, in order to sample more densely where the image changes significantly, such as along the outline of an object. With this technique, such regions, which are few compared with homogeneous areas, benefit from correlated light paths without a large increase in computation.
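Why correlated paths help can be shown with a small sketch. The article does not detail how the correlation is built; here we assume the simplest form, reusing the same random number for a pixel and its neighbour (an identity "shift" of the base path). The hypothetical per-pixel integrand reaches a small bright light with probability 0.1 and varies only slowly between pixels, so the exact difference between neighbours is 0.1. Gradient-domain renderers then reconstruct the final image from such differences together with ordinary pixel estimates, a step omitted here.

```python
import random
import statistics

def integrand(pixel: int, u: float) -> float:
    """Hypothetical per-pixel path contribution for a random number u in [0, 1):
    a small bright light is reached with probability 0.1, and the result varies
    slowly from one pixel to the next."""
    light = 10.0 if u < 0.1 else 0.0
    return light * (1.0 + 0.1 * pixel)

def diff_independent(pixel: int, n: int, rng: random.Random) -> float:
    # each pixel gets its own random numbers: the lighting noise does not cancel
    return sum(integrand(pixel + 1, rng.random()) - integrand(pixel, rng.random())
               for _ in range(n)) / n

def diff_correlated(pixel: int, n: int, rng: random.Random) -> float:
    # both pixels reuse the same random number, so the shared lighting noise cancels
    total = 0.0
    for _ in range(n):
        u = rng.random()
        total += integrand(pixel + 1, u) - integrand(pixel, u)
    return total / n

def compare(pixel: int = 0, n: int = 16, runs: int = 500,
            seed: int = 2) -> dict[str, tuple[float, float]]:
    """Mean and variance of each difference estimator over independent runs."""
    rng = random.Random(seed)
    results = {}
    for name, estimator in (("independent", diff_independent), ("correlated", diff_correlated)):
        values = [estimator(pixel, n, rng) for _ in range(runs)]
        results[name] = (statistics.fmean(values), statistics.variance(values))
    return results
```

Both estimators are unbiased, but with the same number of samples the correlated one has a variance roughly two orders of magnitude lower in this toy, which is the effect gradient-domain methods exploit.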

The connection between these different gradient estimation processes is a very active research topic in the field of image synthesis.

More Realistic Computer-Generated Images

The main objective of image synthesis is to reproduce the interaction between light and objects in a 3D scene faithfully and efficiently: faithfully, by simulating the laws of physics while taking into account the perception of the human visual system; efficiently, by estimating each pixel value at a reasonable cost. Algorithms derived from the Monte Carlo and Metropolis-Hastings methods now make it possible to meet both requirements. We are currently developing the next generation of image synthesis algorithms to push the limits of rendering even further.