AI vision systems often perform worse when faced with samples that are visually different from those used during training. For example, an autonomous driving system trained in clear summer conditions may suddenly need to navigate snowy winter roads at night. Our research introduces Feedback-Guided Domain Synthesis (FDS), a method that uses advanced image-generation models to create realistic, previously unseen variations of objects and scenes. By training AI on this richer and more diverse set of samples, we improve its ability to interpret unfamiliar conditions, paving the way for more reliable performance in applications ranging from autonomous driving to medical imaging.

Why AI Needs to Handle Unseen Domains

AI vision systems are increasingly used in safety-critical applications, from self-driving cars to medical diagnosis. In these settings, the visual conditions at deployment often differ from those in the training data, a phenomenon known as domain shift. Even small changes in lighting, texture, style, or camera settings can make these systems less reliable. Traditional solutions either adapt the system after deployment (which requires extra time, manual annotation effort, and associated costs) or try to simulate new conditions using simple image manipulations, which rarely capture the full complexity of real-world variations. A more powerful and realistic way to prepare AI for unseen domains is needed.

Our Approach

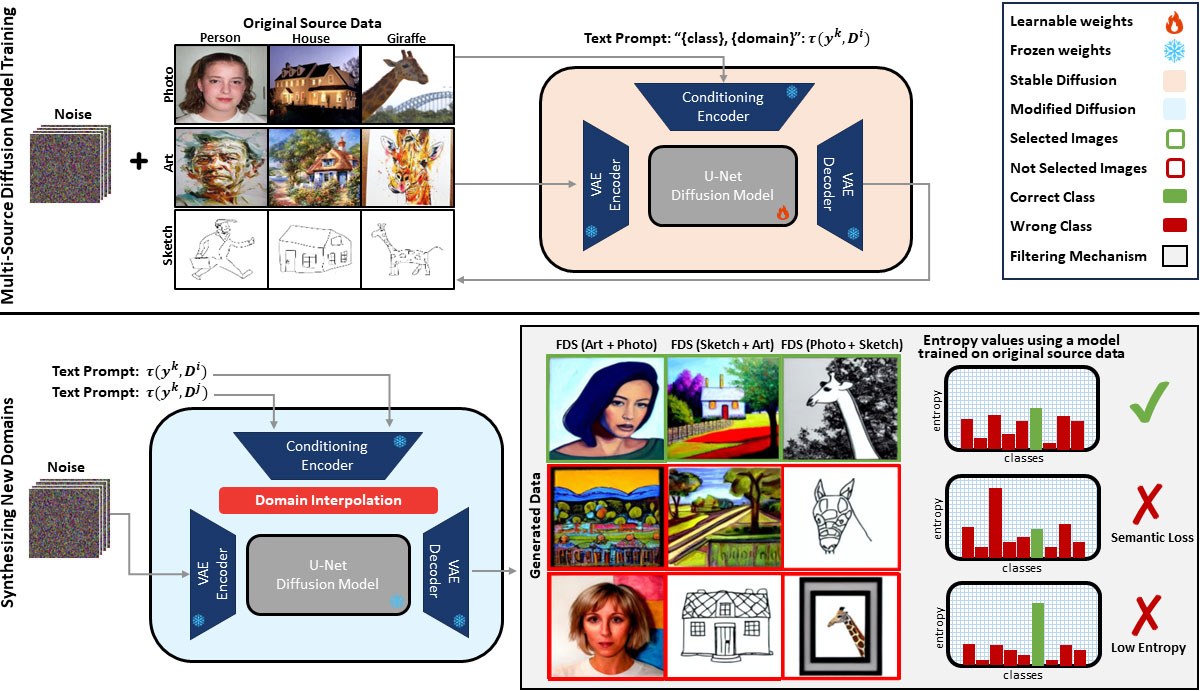

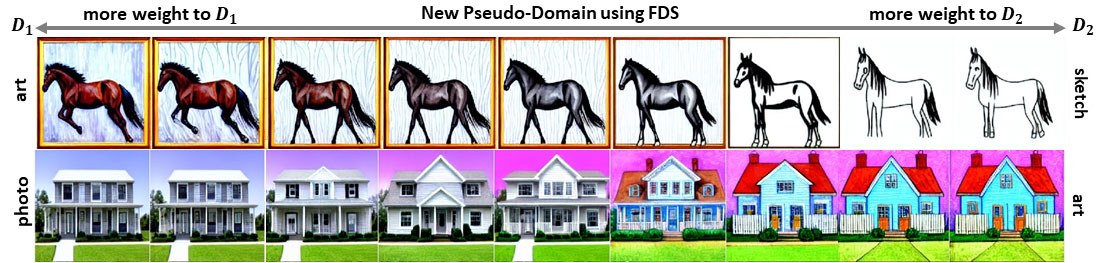

We developed Feedback-Guided Domain Synthesis (FDS), a method that uses diffusion models, a class of advanced image-generation models, to prepare AI vision systems for unfamiliar conditions. Rather than relying solely on existing training data, FDS generates realistic new pseudo-domains by blending characteristics from multiple known domains, for example by merging the visual style of photographs with the texture and structure of hand-drawn sketches. A feedback mechanism then evaluates these synthetic images and selects those that are most challenging for the AI. This ensures that training focuses on the examples that will teach the AI the most.
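To make the feedback step more concrete, here is a minimal sketch of how "most challenging" images might be picked. It assumes, purely for illustration, that difficulty is measured by the classifier's cross-entropy loss on each synthetic image and that a fixed number of images is kept; the summary above does not state the exact criterion, and the names `cross_entropy` and `select_hardest` are hypothetical.

```python
import math
from typing import List, Sequence


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-probability the classifier assigns to the true class."""
    eps = 1e-12  # guards against log(0)
    return -math.log(max(probs[label], eps))


def select_hardest(
    probs_batch: Sequence[Sequence[float]],
    labels: Sequence[int],
    keep: int,
) -> List[int]:
    """Return indices of the `keep` synthetic images with the highest loss.

    Assumption: a higher loss means the image is more challenging for the
    current classifier. The real FDS selection rule may differ.
    """
    losses = [cross_entropy(p, y) for p, y in zip(probs_batch, labels)]
    ranked = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return ranked[:keep]


# Toy classifier outputs for three synthetic images (two classes).
probs_batch = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
labels = [0, 0, 1]
hardest = select_hardest(probs_batch, labels, keep=2)
print(hardest)
```

In this toy batch the second image is misclassified with high confidence and the third is a coin flip, so those two would be kept for training while the easy first image is dropped.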

By learning from these diverse and realistic pseudo-domains, the AI is encouraged to focus on domain-invariant features, the aspects of an object that remain constant regardless of style or visual presentation. This ability to recognize what truly defines an object, rather than its superficial appearance, is what makes the system more robust when it encounters entirely new domains after deployment.

Results and Impact

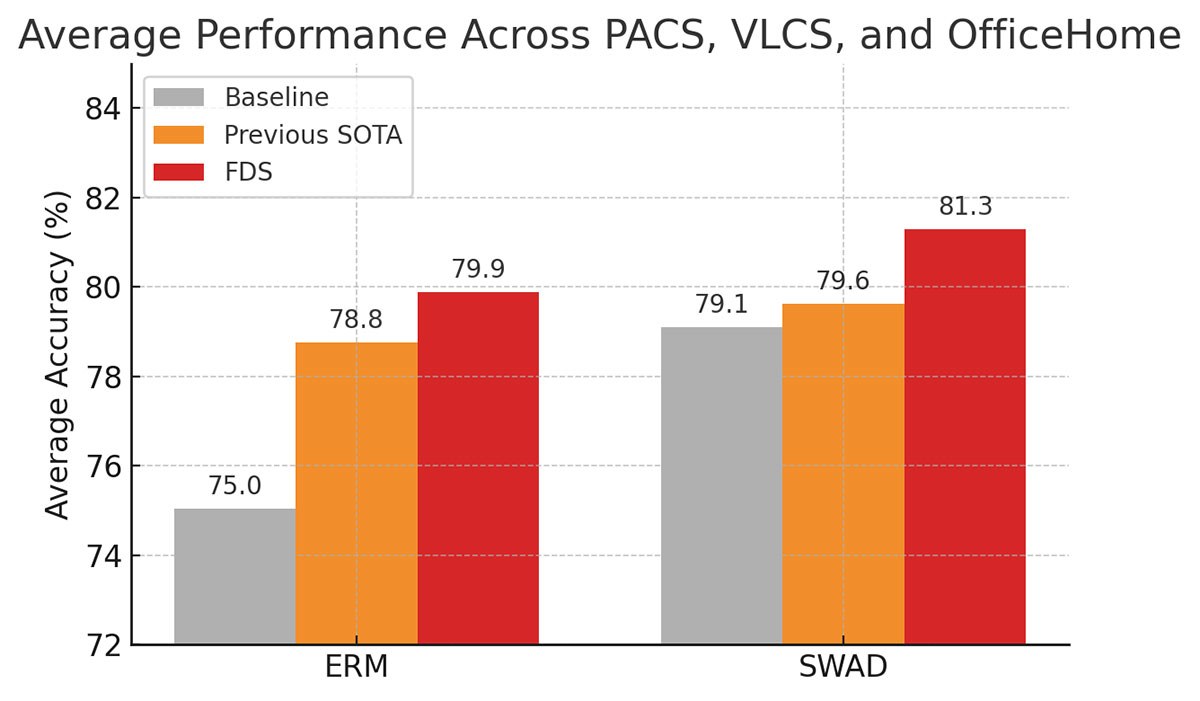

We evaluated FDS on three widely used benchmarks for domain generalization: PACS, VLCS, and OfficeHome. In each case, the AI was trained on several source domains and tested on a completely unseen domain. Compared to strong baseline methods, FDS consistently delivered higher performance, setting new state-of-the-art records in multiple scenarios.
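The evaluation setup can be sketched as a simple loop over held-out domains. The snippet below assumes the common leave-one-domain-out convention, in which each domain takes a turn as the unseen test domain; the helper name `leave_one_domain_out` is hypothetical, and the four domain names are those of the public PACS dataset (photo, art painting, cartoon, sketch).

```python
from typing import List, Tuple


def leave_one_domain_out(domains: List[str]) -> List[Tuple[List[str], str]]:
    """Build (source_domains, unseen_target_domain) pairs.

    Each domain is held out once; the model would be trained on the
    remaining domains and tested only on the held-out one.
    """
    splits = []
    for held_out in domains:
        sources = [d for d in domains if d != held_out]
        splits.append((sources, held_out))
    return splits


pacs_domains = ["photo", "art_painting", "cartoon", "sketch"]
splits = leave_one_domain_out(pacs_domains)
for sources, target in splits:
    print(f"train on {sources}, test on {target}")
```

Under this assumed protocol, a benchmark score is typically the average test accuracy across all held-out domains.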

Importantly, these gains were achieved without increasing the complexity of the model at deployment. The improvements come entirely from better training, thanks to the realistic and diverse pseudo-domains generated during the process.

For real-world applications, this means AI systems trained with FDS are better prepared to handle new environments from the outset. Whether it’s recognizing an object in a new artistic style, identifying products from a different manufacturer, or interpreting medical images from unfamiliar equipment, FDS helps maintain reliability when conditions change.

Proposed Method at a Glance

- Show that diffusion models can be trained on multiple source domains to generate realistic pseudo-domains.

- Propose a feedback-based strategy to select the most challenging synthetic images, focusing training on what improves robustness the most.

- Achieve state-of-the-art performance on standard domain generalization benchmarks.

- Maintain the same deployment complexity, with no additional cost at inference time.

Conclusion and Future Outlook

Our method, FDS, creates realistic pseudo-domains that help AI vision systems focus on features that remain consistent across environments, making them more reliable in unfamiliar conditions. Although developed for image classification, the principles behind FDS could be adapted to other vision tasks, such as object detection or segmentation, with appropriate modifications. As AI takes on more safety-critical roles, methods like FDS will be essential for maintaining dependable performance when the world looks different from the training data.

Additional Information

For more information on this research, please read the following paper:

Feedback-Guided Domain Synthesis with Multi-Source Conditional Diffusion Models for Domain Generalization. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025.

Acknowledgement

We are grateful to our collaborators and supporters who have made this research possible.